July 29, 2020

There has been increasing buzz in the past decade about the benefits of using a microservice architecture. Let’s explore what microservices are and are not, as well as contrast them with traditional monolithic applications. We’ll discuss the benefits of using a microservices-based architecture and the effort and planning that are required to transition from a monolithic architecture to a microservices architecture.

Finally, we’ll take a detailed dive into the process of deploying a microservice into the AWS ecosystem, and talk about the importance of monitoring, both during a deployment and once an application is running in production. We’ll discuss some of the challenges and how you can leverage Sumo Logic’s expertise and tools to streamline the process and get you back to developing the next feature on your roadmap.

The traditional monolithic application contains all of the logic required to run the application. Two main benefits of monolithic applications are 1) a single code base, and 2) efficient interactions between different operations. The monolithic application stores all of its data in a single data source – usually a relational database.

Some of the design aspects that make a monolithic application useful are also at the core of the problems with monolithic applications. Any update to the single code base requires a full deployment or release. Given the effort required to create, test, and deploy a new release, each release tends to bundle an extensive collection of updates, any of which could introduce bugs to the application.

Monolithic applications can also be difficult to scale as your user base grows. They usually require vertical scaling, wherein we increase the size and capacity of the underlying hardware to handle the additional load.

In the broadest sense, a microservices-based architecture involves breaking your application into individual services, grouped by domain. Organizations that adopt the concept of microservices also embrace a decentralized approach to managing which tools and languages are used, with each service implemented behind an API (Application Programming Interface).

I’m going to draw from my experiences with microservice-based systems to share an example of how to design and construct an eCommerce application. We’ll begin by dividing the work into domains based on common areas of expertise. We could establish the following domains:

Within each of these domains, we begin with the design of a series of API contracts. These contracts define the required inputs, expected outputs, and validation requirements. These documents become the established foundation on which to build the service, and allow related teams to understand the design of the new service before development begins.
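As a minimal sketch of what such a contract might capture, the Python below models the inputs, outputs, and validation rules for a hypothetical price lookup. The names (`PriceRequest`, `PriceResponse`, `sku`, `currency`) and the list of supported currencies are assumptions made for illustration; teams often record contracts in a dedicated specification format instead.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical validation rule: the currencies this contract accepts.
SUPPORTED_CURRENCIES = {"USD", "EUR", "GBP"}


@dataclass(frozen=True)
class PriceRequest:
    """Required inputs for a hypothetical price lookup."""
    sku: str
    currency: str = "USD"

    def validate(self) -> List[str]:
        """Return a list of validation errors; an empty list means valid."""
        errors = []
        if not self.sku.strip():
            errors.append("sku must not be empty")
        if self.currency not in SUPPORTED_CURRENCIES:
            errors.append(f"unsupported currency: {self.currency}")
        return errors


@dataclass(frozen=True)
class PriceResponse:
    """Expected output of the lookup."""
    sku: str
    currency: str
    amount_cents: int
```

Because the request and response shapes are agreed on up front, a consuming team can code against them before the service itself exists.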

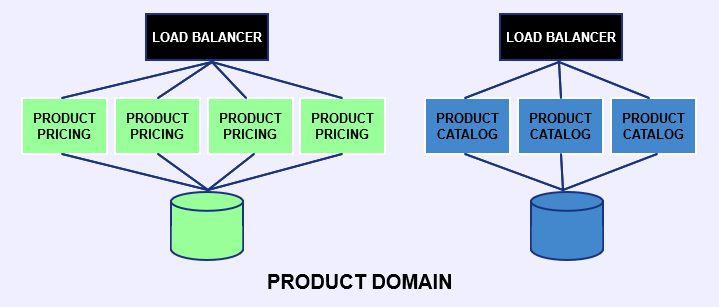

Each service manages its own data store. For instance, the product-pricing service would have sole access to a database that stores the pricing information for the site. Any CRUD (Create, Read, Update, and Delete) operation related to pricing needs to go through the Pricing Service.
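The ownership rule can be sketched roughly as follows. This is an illustration under stated assumptions, not a real implementation: `PricingService` and `CartService` are hypothetical names, an in-memory dictionary stands in for the pricing database, and a direct method call stands in for what would normally be a network request to the service’s API.

```python
from typing import Dict


class PricingService:
    """Hypothetical Pricing Service: the only code that touches pricing data."""

    def __init__(self) -> None:
        # An in-memory dict stands in for the service's private database.
        self._prices: Dict[str, int] = {}

    def set_price(self, sku: str, amount_cents: int) -> None:
        """Create or update the price for a SKU."""
        if amount_cents < 0:
            raise ValueError("amount_cents must be non-negative")
        self._prices[sku] = amount_cents

    def get_price(self, sku: str) -> int:
        """Read the price for a SKU; raises KeyError if it is unknown."""
        return self._prices[sku]

    def delete_price(self, sku: str) -> None:
        """Delete the price for a SKU; raises KeyError if it is unknown."""
        del self._prices[sku]


class CartService:
    """Hypothetical consumer: it asks the Pricing Service, never its database."""

    def __init__(self, pricing: PricingService) -> None:
        self._pricing = pricing
        self._items: Dict[str, int] = {}

    def add(self, sku: str, quantity: int) -> None:
        self._items[sku] = self._items.get(sku, 0) + quantity

    def total_cents(self) -> int:
        # Every price read goes through the Pricing Service's public methods.
        return sum(self._pricing.get_price(sku) * qty
                   for sku, qty in self._items.items())
```

The cart never sees how prices are stored, so the pricing team remains free to change its schema or database without coordinating with other teams.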

Developing a new system from the ground up with microservices can be challenging, and migrating an existing monolithic application to a microservices-based approach may be even more so. Still, the long-term benefits generally outweigh the effort and the risk.

Most teams that adopt microservices work within the agile framework and deploy their services using a continuous integration / continuous deployment (CI/CD) pipeline. This approach allows the team to focus on the most pressing issues at all times, and deploy them to a production environment quickly and on a regular cadence. Unit testing, integration testing, static analysis, and security scans are performed automatically within the pipeline. The overall benefit is that you can get small changes deployed more often, minimizing downtime and improving the overall quality and feature set of your service.

Microservices also lend themselves to horizontal scaling. As the usage of your service increases, you can increase capacity by adding additional instances or containers that execute the identical codebase. Load balancers share the traffic load between all of the systems that are executing the code.
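To make the idea concrete, here is a small sketch of round-robin distribution, one of the simplest strategies a load balancer can use. The `RoundRobinBalancer` class and the instance names are hypothetical; production load balancers also account for health, connection counts, and more.

```python
from typing import List


class RoundRobinBalancer:
    """Hypothetical balancer that hands requests to instances in turn."""

    def __init__(self, instances: List[str]) -> None:
        if not instances:
            raise ValueError("at least one instance is required")
        self._instances = list(instances)
        self._next = 0

    def add_instance(self, name: str) -> None:
        """Scale out: add another instance running the identical codebase."""
        self._instances.append(name)

    def route(self) -> str:
        """Return the instance that should handle the next request."""
        instance = self._instances[self._next % len(self._instances)]
        self._next += 1
        return instance
```

Adding capacity is then a matter of calling `add_instance`; callers keep talking to the balancer and never need to know how many instances sit behind it.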

Resiliency and fault tolerance are tightly related to horizontal scaling. Whereas a monolithic application relies on the uptime of a single highly provisioned server, we typically deploy microservices on a network of smaller virtual machines or containers. If a specific instance becomes unstable or stops responding, we can task monitoring services with terminating the malfunctioning instance and provisioning a new instance in its place. This approach allows systems to become self-healing and dramatically improve the uptime metrics of your application.
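The self-healing loop can be sketched in a few lines. The `heal` function and its `is_healthy` and `provision` callbacks are hypothetical stand-ins; in a real deployment the health check and the replacement would be handled by the platform’s own tooling.

```python
from typing import Callable, List


def heal(instances: List[str],
         is_healthy: Callable[[str], bool],
         provision: Callable[[], str]) -> List[str]:
    """Return the fleet with each unhealthy instance replaced by a new one."""
    healed = []
    for instance in instances:
        if is_healthy(instance):
            healed.append(instance)
        else:
            # Terminate-and-replace: the bad instance is dropped and a
            # freshly provisioned one takes its place.
            healed.append(provision())
    return healed
```

Run on a schedule, a check like this keeps the fleet at its intended size without anyone being paged to restart a server.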

One of the reasons why organizations might consider a migration to a microservices architecture is to move from an on-premises hosting solution into a cloud hosting service, such as Amazon Web Services (AWS).

If you are considering such a move, an excellent place to start is with the 6 Strategies for Migrating Applications to the Cloud. There is no one-size-fits-all solution for organizations wishing to move to the cloud, and this article provides initial insights into the various options as well as a synopsis of what is involved.

If you adopt the approach of refactoring or re-architecting your application, you have two options. The first is to build an entirely new system from the ground up using a microservice architecture. The benefit of this approach is that you get an entirely cloud-native system, and you can start fresh with the lessons of your existing monolithic application as a guide to what to do and what not to do. This approach requires a significant investment and won’t deliver results for some time. Nevertheless, if your leadership and corporate sponsors are committed to the long game, this approach can work.

The second approach is to refactor your monolithic application into microservices gradually. You accomplish this by building specific functionality into a microservice. After you deploy the microservice, you can refactor the monolithic application to use the microservice. The intricacies of this approach are well outside the scope of this text; however, an excellent place to begin that conversation is with this guide from Martin Fowler.
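At its simplest, the handoff might look like the sketch below. The `Monolith` class, the `pricing_client` callable, and the sample data are all hypothetical; the point is only that the monolith keeps its original logic until a client for the new microservice is supplied.

```python
from typing import Callable, Dict, Optional


class Monolith:
    """Hypothetical monolith that is being migrated one feature at a time."""

    def __init__(self,
                 pricing_client: Optional[Callable[[str], int]] = None) -> None:
        # Legacy data still lives inside the monolith during the migration.
        self._legacy_prices: Dict[str, int] = {"ABC-1": 1999}
        # Once the new service is deployed, inject a client that calls it.
        self._pricing_client = pricing_client

    def get_price(self, sku: str) -> int:
        if self._pricing_client is not None:
            # Migrated path: delegate to the microservice.
            return self._pricing_client(sku)
        # Original in-process logic, used until the migration is switched on.
        return self._legacy_prices[sku]
```

Callers of `get_price` see no difference between the two paths, which is what lets the migration proceed one feature at a time.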

The term microservices doesn’t imply a specific type of infrastructure, only that we reduce a system into multiple, smaller services. One of the most cost-effective and performant ways of doing this is with a containerized approach. Docker containers can be built locally, tested, and then deployed into a production environment. The advent of orchestration systems like Kubernetes further enhances the ability of teams to build and maintain their microservice-based systems with containers.

Regardless of the approach you select to implement your microservices, you’ll need a way to deploy them into your environment. You’ll need to implement an automated CI/CD pipeline if you want to leverage the benefits of a microservices architecture. We talked about CI/CD earlier, but let’s dig into it a little more.

You can use a tool like Jenkins, CircleCI, or Travis CI to build your pipeline, or you can use AWS CodePipeline to create a pipeline inside your AWS account.

A CI/CD pipeline is an automated process that is triggered when you commit new code into your source-code repository or merge a feature branch into the master branch. The pipeline builds the code, executes a suite of tests against the new build, and then deploys the code into a production environment. A critical component of a comprehensive CI/CD pipeline is post-deployment monitoring to ensure the success of the newly-deployed code. If any part of the pipeline – including the deployment – fails or generates errors, then any updates should be rolled back, and the user alerted about the failures.
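The control flow described above can be sketched as a short function. Everything here is a hypothetical stand-in: `run_pipeline`, the stage names, and the `rollback` and `alert` callbacks are not the API of any particular CI/CD tool.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a step that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage],
                 rollback: Callable[[], None],
                 alert: Callable[[str], None]) -> bool:
    """Run stages in order; on the first failure, roll back and alert."""
    for name, step in stages:
        try:
            ok = step()
        except Exception:
            # A step that raises is treated the same as one that fails.
            ok = False
        if not ok:
            rollback()
            alert(f"stage '{name}' failed; changes rolled back")
            return False
    return True
```

A typical stage list would be build, test, deploy, and a post-deployment monitoring check, so that a deployment that starts generating errors triggers the same rollback path as a failing test.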

AWS has become the de facto platform of choice for organizations moving their infrastructure into the cloud. A benefit of AWS is that you can deploy your microservices on virtual machines, using its EC2 service. If you adopt the more popular container approach, you can use ECS, AWS Fargate, or EKS, which is the AWS managed Kubernetes service.

Amazon also offers AWS CodePipeline, which is an orchestration tool that allows you to define a CI/CD pipeline directly within your AWS account. AWS CodePipeline can connect AWS CodeCommit, AWS CodeBuild, and AWS CodeDeploy to build, test, and release your updates into production; or, you can substitute your favorite tool at the appropriate place in the pipeline.

If you’d like to get started with building a pipeline, the AWS CodePipeline page at https://aws.amazon.com/codepipeline/ is a great place to start. I’ve also found the following resources helpful in different situations.

As you can see, there are many different ways to deploy your code onto the AWS platform. No matter how comprehensive your test suite, or how expensive the static code analysis or security scanning systems you include as part of the pipeline, code updates and their related deployments aren’t guaranteed to be perfect.

The most critical step in your deployment pipeline, and in the continued maintenance of your system, is active monitoring. Within AWS, you have access to Amazon CloudWatch, which collects logs and metrics from the services within your account. CloudWatch is a goldmine of information. The breadth of service metrics and the sheer volume of data make CloudWatch both powerful and unwieldy at the same time.
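One common way to turn that volume of data into a signal is a threshold alarm: raise an alert only when a metric stays above a limit for several consecutive periods. The sketch below illustrates the idea in plain Python; `should_alarm` and its parameters are hypothetical and do not represent the CloudWatch API.

```python
from typing import Sequence


def should_alarm(datapoints: Sequence[float],
                 threshold: float,
                 periods: int) -> bool:
    """True if the most recent `periods` datapoints all exceed `threshold`."""
    if periods <= 0:
        raise ValueError("periods must be positive")
    if len(datapoints) < periods:
        # Not enough data yet to make a decision.
        return False
    return all(value > threshold for value in datapoints[-periods:])
```

Requiring several consecutive breaches filters out one-off spikes, at the cost of reacting a little later to a genuine problem.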

Many organizations have decided to partner with a third-party provider that specializes in the analysis of metrics and utilizes machine learning to identify actual and potential problems. Sumo Logic is such a provider, with a depth of experience in the collection and intelligent analysis of AWS data. In most cases, this process is as simple as installing the appropriate Sumo Logic app and creating an auditing role with AWS IAM (Identity and Access Management).
