
October 16, 2019

This document is meant for executives (CIO/CISO/CxO) and IT professionals who are seeking to understand the business use case for Kubernetes. The document addresses topics like:

Many enterprises adopting Kubernetes realize that Kubernetes is only the first step to building scalable modern applications. To get good value from Kubernetes, enterprises need solutions that can monitor and secure Kubernetes applications. Sumo Logic provides the industry’s first Continuous Intelligence Solution for Kubernetes to help enterprises control and manage their Kubernetes deployments. To learn more, please refer to the Sumo Logic Kubernetes eBook.

Monitoring, troubleshooting and securing Kubernetes with Sumo Logic

Marc Andreessen famously said, "Software is eating the world." Today, every enterprise is a software business, and the enterprise CIO is tasked with delivering applications whose quality and customer experience rival those of Amazon, Google or Netflix.

What these applications have in common is the need for constant change to keep customer experiences fresh, easy and relevant. This requires DevSecOps teams to constantly release secure updates that implement improvements, fix issues, and deliver new features and capabilities. According to the 2019 DevOps Research & Assessment (DORA) State of DevOps Report, elite performers (companies that deploy multiple times per day) deploy code 208 times more frequently than low performers (companies that deploy once a month or twice a year). Some of the industry’s leading digital experiences, such as Amazon, Google or Netflix, deploy on average every 11.7 seconds in an effort to gain competitive value through increased usage or revenue.

This need for “speed and agility of innovation” is driving the way companies build, run and secure their modern applications, and it is transforming software architecture toward micro-services that enable faster change overall. Micro-services depend on containerized applications and on orchestration (that is, automation) to speed the deployment of improvements and new capabilities essential to maintaining highly available, secure customer experiences.

While the business benefits of digital transformation and software innovation are clearly understood, the IT capabilities needed to deliver these benefits are still evolving. What is very clear is that containers are becoming a must-have platform in the IT architecture. Containers offer the benefits of immutable infrastructure with predictable, repeatable, and faster development and deployments. With these capabilities, containers change the way applications are architected, designed, developed, packaged, delivered, and managed, paving the way to better application delivery and experience.

But the very strength of containers can become their Achilles heel: it’s very easy to create lots (and we mean lots) of containers across your apps. And now we have a new problem: how does one manage thousands or even tens of thousands of these containers? How do you control ephemeral containers that have lifetimes of a few seconds to minutes? How do you optimize resource utilization in large-scale containerized environments? The answer is orchestration and Kubernetes.

In a nutshell, Kubernetes is a system for deploying applications and more efficiently utilizing the containerized infrastructure that powers the apps. Kubernetes can save organizations money because it takes less manpower to manage IT; it makes apps more resilient and performant.

You can also run Kubernetes on-premises or within public Cloud. AWS, Azure, and GCP offer managed Kubernetes solutions to help customers get started quickly and efficiently operate K8s apps. Kubernetes also makes apps a lot more portable, so IT can move them more easily between different clouds and internal environments.

In a nutshell, Kubernetes is the new Linux OS of the Cloud.

Google created Kubernetes and it is now part of CNCF, with very active engagement and contribution from many enterprises large and small.


In two words: Hell Yeah!

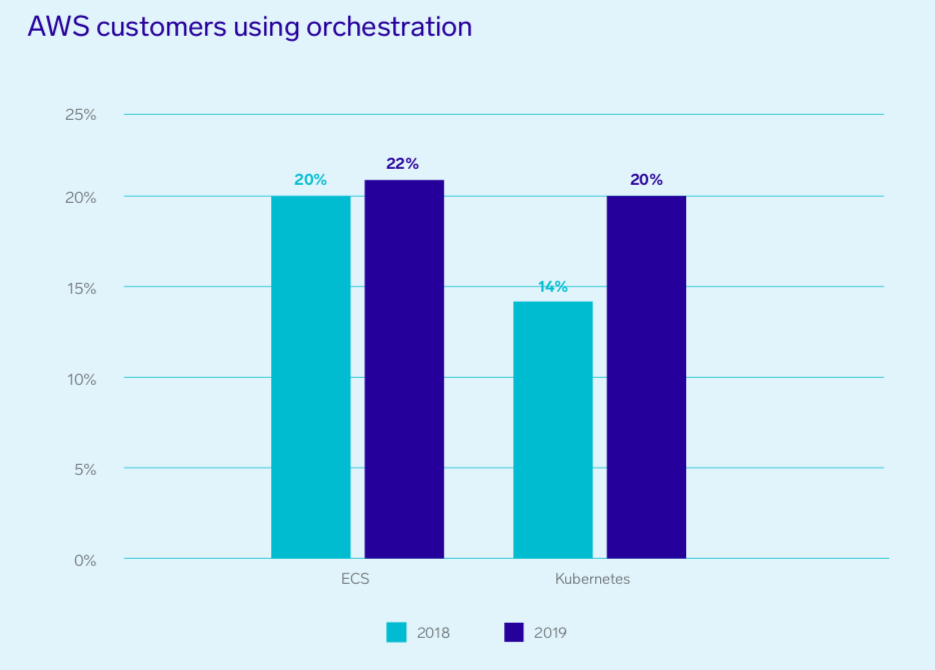

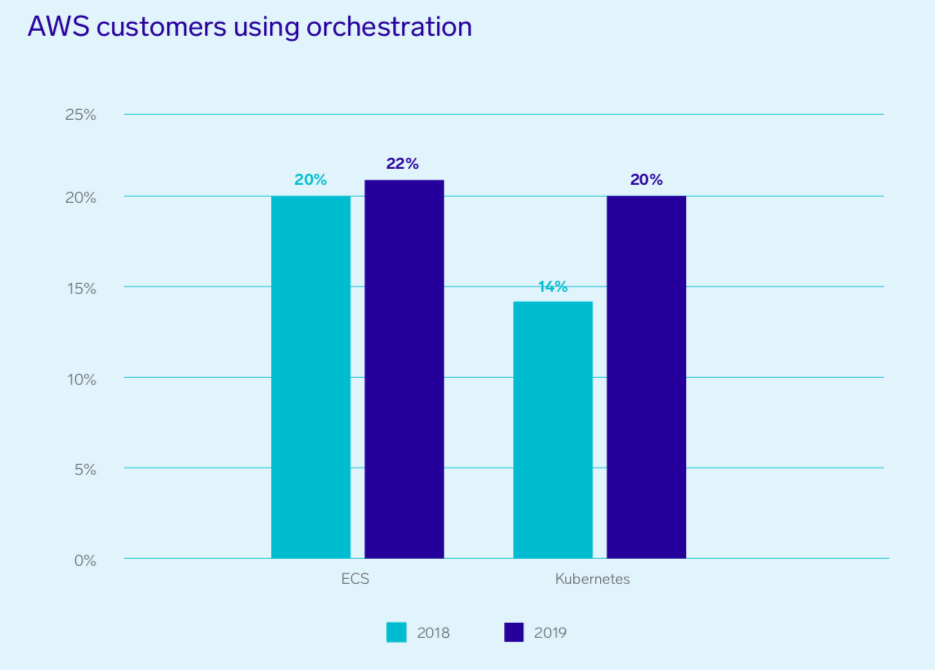

Several data points show rapid Kubernetes adoption. Sumo Logic’s fourth annual Continuous Intelligence Report, “The State of Modern Applications and DevSecOps in the Cloud,” highlights adoption data on Kubernetes within enterprises. The report states that K8s is seeing increased adoption in on-premises as well as cloud-based environments. In fact, 1 in 3 enterprises in the AWS cloud today use Kubernetes as their key orchestration solution.

Here are five fundamental business capabilities that Kubernetes can drive in the enterprise, be it large or small. And to add teeth to these use cases, we have identified some real-world examples to validate the value that enterprises are getting from their Kubernetes deployments.

Let's look at the values in greater detail next.

Kubernetes enables a “microservices” approach to building apps. Now you can break up your development team into smaller teams that focus on a single, smaller microservice. These teams are smaller and more agile because each team has a focused function. APIs between these microservices minimize the amount of cross-team communication required to build and deploy. So, ultimately, you can scale multiple small teams of specialized experts who each help support a fleet of thousands of machines.

Kubernetes also allows your IT teams to manage large applications across many containers more efficiently by handling many of the nitty-gritty details of maintaining container-based apps. For example, Kubernetes handles service discovery, helps containers talk to each other, and arranges access to storage from various providers such as AWS and Microsoft Azure.

Real World Case Study

Airbnb’s transition from a monolithic to a microservices architecture is pretty amazing. They needed to scale continuous delivery horizontally, and the goal was to make continuous delivery available to the company’s 1,000 or so engineers so they could add new services. Airbnb adopted Kubernetes to support over 1,000 engineers concurrently configuring and deploying over 250 critical services to Kubernetes. The net result is that Airbnb can now do over 500 deploys per day on average.

Tinder: One of the best examples of accelerating time to market comes from Tinder. This blog post describes Tinder’s K8s journey well. Here’s the CliffsNotes version of the story: due to high traffic volume, Tinder’s engineering team faced challenges of scale and stability, and they realized that the answer to their struggle was Kubernetes. Tinder’s engineering team migrated 200 services and ran a Kubernetes cluster of 1,000 nodes, 15,000 pods, and 48,000 running containers. While the migration process wasn't easy, the Kubernetes solution was critical to ensuring smooth business operations going forward.

Kubernetes can help your business cut infrastructure costs quite drastically if you’re operating at massive scale. Kubernetes makes a container-based architecture feasible by packing apps together to make optimal use of your cloud and hardware investments. Before Kubernetes, administrators often over-provisioned their infrastructure to conservatively handle unexpected spikes, or simply because it was difficult and time-consuming to manually scale containerized applications. Kubernetes intelligently schedules and tightly packs containers, taking into account the available resources. It also automatically scales your application to meet business needs, thus freeing up human resources to focus on other productive tasks.
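To make the idea of "tightly packing" containers concrete, here is a minimal sketch of a classic bin-packing heuristic (first-fit decreasing) applied to container CPU requests. It is an illustration only: the real Kubernetes scheduler filters and scores nodes using many more signals, and the function name, the sample requests, and the 4-CPU node size are all assumptions made for this example.

```python
from typing import List


def first_fit_decreasing(cpu_requests: List[float], node_capacity: float) -> List[List[float]]:
    """Pack CPU requests onto equally sized nodes using first-fit decreasing.

    Hypothetical helper for illustration; not the Kubernetes scheduler's algorithm.
    """
    if any(request > node_capacity for request in cpu_requests):
        raise ValueError("a request exceeds the capacity of a single node")

    nodes: List[List[float]] = []  # CPU requests placed on each node
    free: List[float] = []         # remaining CPU on each node

    # Place the largest requests first; each goes on the first node with room.
    for request in sorted(cpu_requests, reverse=True):
        for i, remaining in enumerate(free):
            if request <= remaining + 1e-9:
                nodes[i].append(request)
                free[i] -= request
                break
        else:
            # No existing node has room, so "provision" a new one.
            nodes.append([request])
            free.append(node_capacity - request)
    return nodes


if __name__ == "__main__":
    requests = [2.0, 1.5, 0.5, 1.0, 3.0, 0.5, 2.5, 1.0]  # CPU cores per container (made up)
    packed = first_fit_decreasing(requests, node_capacity=4.0)
    print(f"{len(requests)} containers fit on {len(packed)} nodes")
```

Compared with running one container per machine, the same eight workloads land on far fewer nodes, which is where the infrastructure savings described above come from.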

There are many examples of customers who have seen dramatic improvements in cost optimization using K8s.

Real World Case Study

Spotify is an early K8s adopter and has realized significant cost savings by adopting K8s, as described in this note. Leveraging the orchestration capabilities of K8s, Spotify has seen a 2-3x improvement in CPU utilization, resulting in better IT spend optimization.

Pinterest is another early K8s customer. Leveraging K8s, the Pinterest IT team reclaimed over 80 percent of capacity during non-peak hours. They now use 30 percent fewer instance-hours per day compared to the static cluster.

The success of today’s applications depends not only on features, but also on the scalability of the application. After all, if an application cannot scale well, it will perform poorly at scale at best, and be totally unavailable at worst.

As an orchestration system, Kubernetes is a critical management system to “auto-magically” scale and improve app performance. Suppose we have a CPU-intensive service with a dynamic user load that changes based on business conditions (for example, an event ticketing app that sees dramatic spikes in users and load before an event and low usage at other times). What we need here is a solution that can scale up the app and its infrastructure, so that new machines are automatically spun up as the load increases (more users are buying tickets), and scale them down when the load subsides. Kubernetes offers just that capability by scaling up the application when CPU usage goes above a defined threshold, for example 90 percent on the current machine. And when the load drops, Kubernetes can scale the application back down, thus optimizing infrastructure utilization. Kubernetes auto-scaling is not limited to infrastructure metrics; any type of metric, from resource utilization metrics to custom metrics, can be used to trigger the scaling process.
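The scaling decision described above can be sketched in a few lines. The Kubernetes Horizontal Pod Autoscaler documentation gives the core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping any change when the ratio is within a small tolerance of 1.0. The sketch below follows that rule; the function name, the 60 percent CPU target, and the replica limits are assumptions chosen for illustration, not values from any particular deployment.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Estimate the replica count an autoscaler would ask for.

    Illustrative sketch of the documented HPA rule; parameter names are hypothetical.
    """
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Never go below the configured minimum or above the configured maximum.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Ticket sales open: average CPU is 90% against an assumed 60% target.
    print(desired_replicas(current_replicas=4, current_metric=90, target_metric=60))
    # After the event: average CPU falls to 30%.
    print(desired_replicas(current_replicas=6, current_metric=30, target_metric=60))
```

The same function works for custom metrics such as requests per second: only the meaning of `current_metric` and `target_metric` changes.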

Real World Case Study

LendingTree: Here’s a great article from LendingTree. LendingTree has many microservices that make up its business apps. LendingTree uses Kubernetes and its horizontal scaling capability to deploy and run these services, and to ensure that its customers have access to service even during peak load. And to get visibility into these containerized and virtual services and monitor its Kubernetes deployment, LendingTree uses Sumo Logic.

One of the biggest benefits of Kubernetes and containers is that they help you realize the promise of hybrid and multi-cloud. Enterprises today are already running multi-cloud environments and will continue to do so in the future. Kubernetes makes it much easier to run any app on any public cloud service or any combination of public and private clouds. This allows you to put the right workloads on the right cloud and helps you avoid vendor lock-in. And getting the best fit, using the right features, and having the leverage to migrate when it makes sense all help you realize more ROI (short and longer term) from your IT investments.

Need more data to validate the match made in heaven between multi-cloud and Kubernetes? The Sumo Logic Continuous Intelligence Report identifies a very interesting trend: K8s adoption rises with the number of cloud platforms an organization uses, with 86 percent of customers on all three using managed or native Kubernetes solutions. Should AWS be worried? Probably not. But it may be an early sign of a level playing field for Azure and GCP, because apps deployed on K8s can be easily ported across environments (on-premises to cloud or across clouds).

Real World Case Study

Gannett/USA Today is a great example of a customer using Kubernetes to operate multi-cloud environments across AWS and Google Cloud Platform. In the beginning, Gannett was an AWS shop. Gannett moved to Kubernetes to support its growing scale of customers (they did 160 deployments per day during the 2016 presidential news season!), but as its business and scaling needs changed, Gannett used the fact that its apps were deployed on Kubernetes in AWS to seamlessly run them in GCP.

Whether you are rehosting (lift and shift of the app), replatforming (make some basic changes to the way it runs), or refactoring (the entire app and the services that support it are modified to better suit the new compartmentalized environment), Kubernetes has you covered.

Since K8s runs consistently across all environments, on-premises and in clouds like AWS, Azure and GCP, Kubernetes provides a more seamless and prescriptive path to port your application from on-premises to cloud environments. Rather than deal with all the variations and complexities of the cloud environment, enterprises can follow a more prescribed path:

Real World Case Study

Shopify started as a data center-based application and over the last few years has completely migrated all of its applications to Google Cloud Platform. Shopify first started running containers (Docker); the next natural step was to use K8s as a dynamic container management and orchestration system.

The first step is to start with a minimized host operating system, which has only the services required to run containers. (There is no problem with using a full operating system; that option just has more services which will need to be monitored, configured, and patched.) Examples of minimized systems would be Red Hat Project Atomic, CoreOS Container Linux, RancherOS, and Ubuntu Core.

Next, you’ll need to have SELinux enabled, which allows better isolation between processes. Most Kubernetes distributions enable SELinux by default. Another available layer of security is seccomp, which can restrict the system calls that an individual process can make by assigning profiles.
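As a rough illustration of assigning a seccomp profile, the sketch below builds a Pod manifest that requests the container runtime's default profile through the `securityContext.seccompProfile` field available in current Kubernetes versions (older clusters used annotations instead). The pod name, image, and helper function are hypothetical placeholders; the output is JSON, which `kubectl apply -f` accepts alongside YAML.

```python
import json


def hardened_pod_manifest(name: str, image: str) -> dict:
    """Build a Pod manifest that applies the runtime's default seccomp profile.

    Hypothetical helper for illustration; adapt the fields to your own workloads.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Pod-level setting: every container inherits the default seccomp profile.
            "securityContext": {
                "seccompProfile": {"type": "RuntimeDefault"},
            },
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Container-level setting: block privilege escalation.
                    "securityContext": {"allowPrivilegeEscalation": False},
                }
            ],
        },
    }


if __name__ == "__main__":
    # The image reference below is a made-up example.
    manifest = hardened_pod_manifest("ticketing-api", "registry.example.com/ticketing-api:1.0")
    print(json.dumps(manifest, indent=2))
```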

As a side note, if you are using a container service on a public cloud provider, host security is not as important as the other layers of security, as containers there usually run in a single-tenant system. This means that each container is actually running in its own VM, so it will always have the latest OS patches and no process isolation concerns.

Microservice-based architectures continue to increase in popularity for building and deploying applications and services in a container environment. They involve multiple containers interacting within pods and across hosts to provide the full suite of required business functionality.

Most public cloud providers use a single-tenant model for their container runtimes and leverage the access control lists and security groups they have built for their existing compute environments. Using this type of deployment where access can easily be controlled at the point the compute node accesses the network, it is easy to group similar containers under one group, or ACL, and limit access or provide extra security features like Web Application Firewalls and API Gateways.

In a multi-tenant environment where multiple containers can and will run on the same host, a higher level of network segmentation is needed. Multi-tenant environments are more common in private and hybrid cloud deployments where there is more control of the hardware layer and higher density is a goal.

Unlike in a single-tenant model, traffic cannot simply be controlled as it enters the individual compute nodes, because traffic between container processes within a node also needs to be limited. Kubernetes and the wider industry offer both proven and new networking plugins that can handle this type of traffic. These are typically based on SDN (Software-Defined Networking), and range from Open vSwitch (OVS) to Project Calico and the up-and-coming Istio.
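One way to express this kind of container-to-container restriction is a Kubernetes NetworkPolicy, which is enforced only when the cluster's network plugin supports it (Calico is one that does). The sketch below generates a policy that lets pods labeled as one app reach pods labeled as another on a single TCP port. The namespace, the `app` label values, the port, and the helper name are all assumptions for illustration.

```python
import json


def allow_ingress_policy(namespace: str, target_app: str, client_app: str, port: int) -> dict:
    """Build a NetworkPolicy allowing client_app pods to reach target_app pods on one port.

    Hypothetical helper for illustration; labels and names are placeholders.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": f"allow-{client_app}-to-{target_app}",
            "namespace": namespace,
        },
        "spec": {
            # Pods this policy protects.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only pods with the client label may connect...
                    "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                    # ...and only on this TCP port.
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    policy = allow_ingress_policy("shop", target_app="payments", client_app="checkout", port=8080)
    print(json.dumps(policy, indent=2))
```

Once a policy selects a pod for ingress, any inbound traffic not matched by a rule is dropped, so other workloads sharing the same host cannot reach the protected pods.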

For the purposes of this article’s focus on Kubernetes, we are going to assume that best practices are being followed on the coding side and only address the building, storing, and running of the container images. For more information on secure coding best practices, both the CMU Software Engineering Institute and OWASP have lists to get you started.

First, let’s address container registries. The best place to retrieve container images, either to use as a base for in-house development or to run as-is, is from known and trusted public registries. While the images hosted there may not be perfect, they have enough eyes looking at them that security issues are often found and resolved in a timely manner, especially on larger projects. The largest and most prevalent of the public Docker registries is Docker Hub.

Most organizations rely on private registries as part of their container strategy. Private registries offer RBAC and other enterprise-friendly features (such as on-premises hosting), which allow their use in even the most highly secure environments. Most organizations will even store container images from the public registry in their private registry to ensure they have a copy of what is running in their environment. You never know when there might be an outage on a public registry or when a project might become unavailable.

Using a private registry provides the opportunity to have a repository that can be scanned for known vulnerabilities. Ideally, images will be scanned before they are stored in this registry and again as they are retrieved to be deployed by Kubernetes (new issues may have been discovered since the last build). Black Duck Software and SonarSource are among the companies that provide solutions to scan applications and container images.

Kubernetes includes cluster-based logging which can be shipped to a centralized logging facility. These logs become increasingly valuable when combined with the application logs that are consolidated to the same centralized logging facility. Having a single location for log storage and analysis allows better trending and rapid detection of security and other application incidents.

So there you have it. Five reasons why every CIO should consider Kubernetes now. With some real-world values to boot.

But what happens after I deploy Kubernetes, you ask. How do I manage Kubernetes? How do I get visibility into Kubernetes? How do I proactively monitor the performance of apps in Kubernetes? How do I secure my application in Kubernetes? That’s where Sumo Logic comes in.

Sumo Logic has a solution built to help your teams get the most out of Kubernetes and accelerate your digital transformation. The solution provides discoverability, observability, and security for your Kubernetes implementation and helps you manage your apps better. Want to know more about Sumo Logic and our Kubernetes solution? Sign up for our service or read more in this easy-to-understand Kubernetes eBook.
