
Maintaining log management best practices requires constant awareness of how technology advances. Your cloud app doesn’t exist in isolation, and that’s a good thing. Information like authentications, connections, access and errors is the essential log data that informs new operational efficiencies and the best approach to security.

But these insights only manifest when logs are appropriately managed, stored and analyzed on a single platform. Log management best practices for your modern apps and infrastructure include centralized logging, structured logging and functions related explicitly to unique programming languages or network structures.

Universally, today’s best practices include log management procedures and tools that merge insights across DevOps and security teams. Throughout this article, we’ll talk about best practices in different situations to help you do more with your data this year and beyond.

Determining what the best practices are for logging and monitoring is unique to each company. The best approach depends on the network, app components, devices and resources that make up the environment. With that said, centralizing your logs from across the network simplifies log management for networks of all types — cloud, multi-cloud, hybrid or on-premises. Converting logs to a structured format ready for analysis by machine learning helps companies across industries claim a competitive advantage. We’ll cover these two best practices and some application-specific details in the following sections.

Centralized logging takes place through a tool that collects data from diverse sources across the IT infrastructure. Data moves from various sources into a public cloud or third-party storage solution. DevOps and security teams should aggregate logs from a variety of sources, including:

Switches, routers and access points to identify misconfiguration and other issues.

Web server data to reveal the finer details of customer acquisition and behavior.

Security data such as logs from intrusion systems and firewalls to pinpoint and address security risks before they become concerns.

Cloud infrastructure and services logs to assess if current resources are adequate and help address latency issues or other needs.

Application-level logs to help determine where errors may require resolution, e.g., payment gateways, in-app databases and other features.

Server logs from databases like PostgreSQL, MongoDB, MySQL and more reveal user experience issues and other performance challenges.

Container logs from Kubernetes or Docker provide close monitoring of a dynamic environment to help organizations balance resource demands with costs and address issues quickly.

What is the benefit of centralized logging? Relocating logs into a centralized tool means information silos break down. Instead, log correlation, normalization and analysis within a single tool generate insights in context. Such oversight allows teams to proactively identify and address critical needs within the application, including addressing SLA, performance issues and availability problems.
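As a rough illustration of normalization and correlation in one place, the sketch below merges events from two sources into a single timeline. The two input formats, the field names and the helper functions (`normalize_firewall`, `normalize_app`, `centralize`) are assumptions made up for this example; they do not describe any particular product's API.

```python
from datetime import datetime, timezone


def normalize_firewall(line: str) -> dict:
    """Parse a hypothetical firewall line such as
    '2024-05-01T12:00:03Z DENY 10.0.0.7 203.0.113.9'."""
    ts, action, src, dst = line.split()
    return {
        "timestamp": datetime.fromisoformat(ts.replace("Z", "+00:00")),
        "source": "firewall",
        "host": src,
        "message": f"{action} connection to {dst}",
    }


def normalize_app(record: dict) -> dict:
    """Convert a hypothetical application record that uses epoch seconds."""
    return {
        "timestamp": datetime.fromtimestamp(record["time"], tz=timezone.utc),
        "source": "app",
        "host": record["host"],
        "message": record["msg"],
    }


def centralize(*streams):
    """Merge normalized events from every source into one timeline."""
    return sorted(
        (event for stream in streams for event in stream),
        key=lambda event: event["timestamp"],
    )


firewall_events = [
    normalize_firewall("2024-05-01T12:00:03Z DENY 10.0.0.7 203.0.113.9"),
]
app_events = [
    normalize_app({"time": 1714564802, "host": "10.0.0.7", "msg": "payment gateway timeout"}),
    normalize_app({"time": 1714564805, "host": "10.0.0.7", "msg": "retry succeeded"}),
]

# Events from both silos now appear side by side, ordered by time.
for event in centralize(firewall_events, app_events):
    print(event["timestamp"].isoformat(), event["source"], event["host"], event["message"])
```

Once every source shares the same timestamp type and field names, a firewall denial and an application timeout on the same host can be read in context rather than in separate tools.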

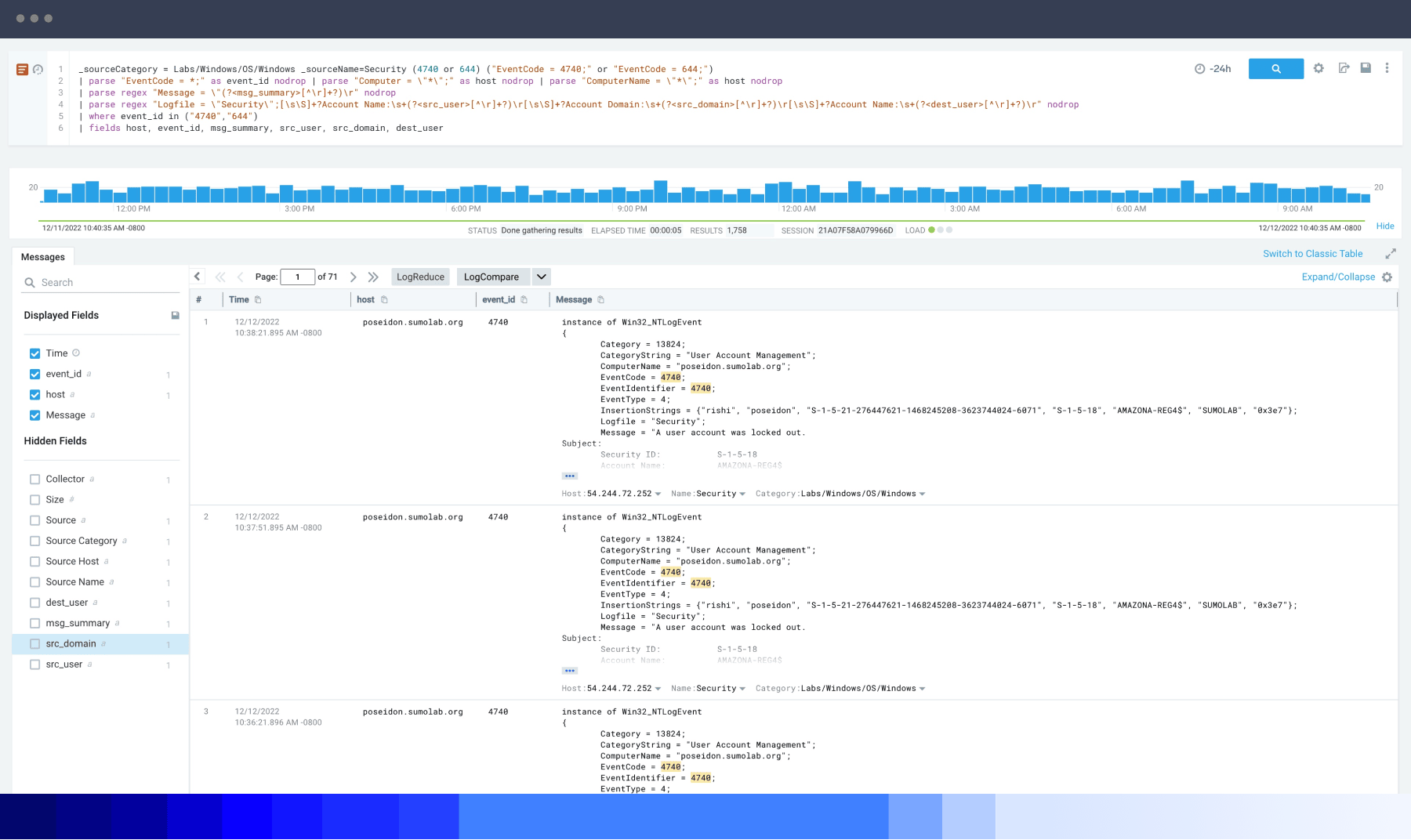

The right log management platform makes this effort significantly simpler. Sumo Logic offers detection tools like LogReduce® and LogCompare to extract critical insights from mountains of data. Powerful outlier detection lets you eliminate the white noise and find the true unknowns affecting your system. Automated service mapping reveals the exact dependencies and traces connected to a specific issue, generating a high-level picture of the health of your microservices infrastructure. Our Root Cause Explorer uses correlated telemetry to help experts go from being aware of an issue to understanding its potential roots within a few moments. If you’re wondering which tool to use for logging in a microservices architecture, across multiple clouds or when pulling logs from third-party systems, Sumo Logic is a leading choice for centralized logging.

Structured logging means converting data into a format that machines can parse and analyze. Log data stored as free-form plain text is hard to query or filter reliably, which limits the insights it can deliver. Structured logs in a more parsable format like XML or JavaScript Object Notation (JSON) make the data usable for monitoring, troubleshooting and investigations.

Some logs may come to you with a predetermined structure from the vendor, while others will be custom logs created internally. Having all these logs in a consistent format is essential, and this conversion is what log management means in practice for many developers and organizations. Even if the custom logs from your platform export in a structured format, other logs require updating and reformatting to allow mastery of the data.

Structured logging makes data more usable by making logs more specific. Key values identified and named within the log equip an algorithm to detect patterns or filter results. Log structure benefits from the same five elements as every other good piece of reporting: who, what, where, when and why.

Who: Username(s) or other ID associated with the log.

What: Error code, impact level, source IP, etc.

Where: Specific gateway or hostname within your infrastructure.

When: Timestamp

Why: This is what your log management platform will help you figure out!
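The who, what, where and when fields above can be sketched with Python's standard `logging` module and a small JSON formatter. The `JsonFormatter` class and the field names (`user`, `error_code`, `source_ip`, `host`) are illustrative assumptions, not a required schema.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            # When: timestamp in UTC, ISO 8601
            "when": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            # Who: user ID passed by the caller through `extra`
            "who": getattr(record, "user", None),
            # What: severity, message, error code and source IP
            "what": {
                "level": record.levelname,
                "message": record.getMessage(),
                "error_code": getattr(record, "error_code", None),
                "source_ip": getattr(record, "source_ip", None),
            },
            # Where: gateway or hostname that produced the event
            "where": getattr(record, "host", None),
        }
        return json.dumps(entry)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("payments")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

# Keys in `extra` become attributes on the log record.
logger.error(
    "Payment declined",
    extra={
        "user": "jdoe",
        "error_code": "E402",
        "source_ip": "198.51.100.24",
        "host": "gateway-eu-1",
    },
)
```

The "why" is deliberately absent from the record: it is the question that analysis across many such entries is meant to answer.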

Remember, a useful log is not just data written down in plain text. Instead, it is documented in a specific format that both humans and automation can understand.

Generally speaking, another high-level logging best practice is that you should never write your logs to files manually. Automated output paired with parsing tools helps you convert the logs from an unstructured to a structured format.
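A parsing step of this kind might look like the following minimal sketch, which turns one unstructured line into a JSON-ready dictionary. The line layout, the `LINE_PATTERN` regular expression and the `parse_line` helper are assumptions chosen for illustration; real log formats vary and usually need their own patterns.

```python
import json
import re
from typing import Optional

# Assumed layout: "<timestamp> <host> <LEVEL> user=<user> <free-text message>"
LINE_PATTERN = re.compile(
    r"(?P<timestamp>\S+) (?P<host>\S+) (?P<level>[A-Z]+) "
    r"user=(?P<user>\S+) (?P<message>.*)"
)


def parse_line(line: str) -> Optional[dict]:
    """Return the named fields of a log line, or None if it does not match."""
    match = LINE_PATTERN.match(line.strip())
    return match.groupdict() if match else None


raw = "2024-05-01T12:00:03Z gateway-eu-1 ERROR user=jdoe Payment declined: code E402"
structured = parse_line(raw)
print(json.dumps(structured, indent=2))
```

Lines that do not match return `None` rather than a partial record, so they can be routed to a separate queue for review instead of silently polluting the structured data.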

Here are some more short mentions of basic best practices specific to certain programming languages, network structures or applications:

When logging in C or C#, make sure logs go to the cloud, not just the console. Use structured logging to preserve contextual and diagnostic data for future users.

REST APIs expose resources so that systems can exchange information online. JSON is generally considered the best log structure for REST API logging.

Node.js functions for server-side programming, where efficient logging can sometimes be a puzzle. You can automate the inclusion of timestamps, tags and other information in these logs. Learn more about Node.js logging best practices.

Amazon Web Services (AWS) logging best practices start with centralizing logs, analytics and security to keep software running efficiently and safely. Learn more about AWS monitoring and logging.

What is log management in cybersecurity? It refers to keeping track of unexpected events and occurrences to identify and manage risks. Log analysis turns this recordkeeping into valuable insights about your system’s security and threats. Analyze security logs at recurring intervals, sometimes in tandem with development sprints, to provide insights and reveal errors to address. Security information and event management (SIEM) is a specialized field within cybersecurity that monitors real-time alerts from hardware and software. Here are some of the security logs (and, more specifically, SIEM logs) that are most important to manage and centralize.

Firewall logs: Today’s firewalls deliver rich data on threats and malware, application types, command and control (C2), and more. Make sure to monitor all firewall logs, both internal and external.

Proxy and web filtering logs: If your firewall does not include these logs, it’s important to monitor IP, URL and domain information for threat hunters to understand any connections to bad locations. User-agent logs are also invaluable in untangling major breaches and issues.

Network security products: If you have standalone systems for other network data like intrusion protection (IPS), intrusion detection (IDS) or network data loss prevention (DLP), centralize these logs for analysis with the rest of the information.

Network sensors: Sensors like a Network Test Access Point (TAP) or Switched Port Analyzer (SPAN) give deeper and more specific data about traffic flows. These logs help users track events they otherwise might not have, such as the lateral movement of a threat in an internal system.

Windows authentication: Tracking a user’s Windows authentication ties one user to the event in the system, even if they change IP addresses in the middle of their activity.

Endpoint security solutions: SIEM data from each device connected to your network can save experts time following up on alerts that are not a threat. For instance, if an Oracle alert stems from a device that does not run Oracle, knowing this alert does not need investigation saves time. On the other hand, if an alert from one or more endpoints corresponds with other anomalies, this provides more information for the investigation.

Threat intelligence: Threat intelligence includes logs and other data connected to recent threats at other organizations. You can access some data for free, while paid lists are sometimes better maintained. Introducing this data to your log analytics platform trains the algorithm to recognize similar patterns or behaviors faster in the future.
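Two of the ideas in this list, checking proxy traffic against threat intelligence and tying activity to a user rather than an IP address, can be sketched in a few lines. This is a plain set lookup, not the machine-learning approach a log analytics platform would apply, and the event fields, indicator values and helper names (`flag_known_bad`, `ips_by_user`) are all hypothetical.

```python
from collections import defaultdict

# Hypothetical indicators taken from a threat intelligence list.
INDICATORS = {"203.0.113.50", "malware.example.net"}

# Hypothetical, already-structured proxy events tagged with the authenticated user.
events = [
    {"user": "jdoe", "src_ip": "10.0.0.7", "domain": "intranet.example.com", "dest_ip": "10.0.1.20"},
    {"user": "jdoe", "src_ip": "10.0.4.12", "domain": "malware.example.net", "dest_ip": "203.0.113.50"},
    {"user": "asmith", "src_ip": "10.0.0.9", "domain": "www.example.org", "dest_ip": "198.51.100.8"},
]


def flag_known_bad(events, indicators):
    """Return events whose destination IP or domain appears in the indicator set."""
    return [
        e for e in events
        if e["dest_ip"] in indicators or e["domain"] in indicators
    ]


def ips_by_user(events):
    """Group source IPs by user so activity follows the person, not the address."""
    seen = defaultdict(set)
    for e in events:
        seen[e["user"]].add(e["src_ip"])
    return dict(seen)


for hit in flag_known_bad(events, INDICATORS):
    print(f"ALERT: {hit['user']} contacted {hit['domain']} ({hit['dest_ip']})")

print(ips_by_user(events))
```

Because each event carries the authenticated user, the flagged connection can still be attributed to `jdoe` even though it came from a different source IP than the earlier request.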

Careful maintenance and monitoring of these logs is a best practice, and for US federal government agencies and contractors, it may be part of NIST 800-53 logging requirements. The National Institute of Standards and Technology (NIST) is an agency of the US Department of Commerce. NIST’s mission is to enable innovation and competitiveness. NIST 800-53 logging and monitoring requirements are some of the controls that create secure and resilient systems where this growth can occur. However, they are not the only ones! Using a log management system like Sumo Logic takes compliance worries off your mind and helps organizations align with these standards with confidence. We are not only FedRAMP Moderate authorized, but also SOC 2 compliant, HIPAA certified, PCI DSS compliant, and ISO 27001 certified.

Log management enables and improves log analysis. Once you have logs managed, organized and parsed, applying analytics best practices generates insights and actionable steps that translate the effort into better business outcomes. Data centralization, automated tagging, pattern recognition, correlation analysis and artificial ignorance emerge as some of the best practices for log analysis. Here’s what each boils down to on a basic level.

Data centralization: Bring all logs together in one centralized platform.

Automated tagging and classification: Logs should be classified automatically and ordered for future analysis.

Pattern recognition and actionable reporting: Use machine learning to identify log patterns and get insight into the next best steps.

Machine-learning-driven correlation analysis: Collating logs from different sources gives a complete picture of each event and allows the identification of connections between disconnected data.

Artificial ignorance: Use tools trained to ignore routine logs and send alerts when something new occurs or something planned does not occur as scheduled.
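Artificial ignorance, the last item above, is simple enough to sketch directly: drop everything known to be routine, report what is left, and report any scheduled event that never showed up. The patterns, messages and the `review` helper below are assumptions for illustration; a production tool would typically learn its routine patterns rather than hard-code them.

```python
import re

# Patterns for messages considered routine (assumed for this example).
ROUTINE_PATTERNS = [
    re.compile(r"^Health check OK$"),
    re.compile(r"^User \S+ logged in$"),
]

# Messages that are expected to appear at least once per review window.
EXPECTED_MESSAGES = {"Nightly backup completed"}


def review(messages):
    """Return (unusual, missing): non-routine messages and expected ones that never appeared."""
    unusual = [
        m for m in messages
        if m not in EXPECTED_MESSAGES
        and not any(p.search(m) for p in ROUTINE_PATTERNS)
    ]
    missing = sorted(EXPECTED_MESSAGES - set(messages))
    return unusual, missing


window = [
    "Health check OK",
    "User jdoe logged in",
    "Disk /dev/sda1 at 97% capacity",
    "Health check OK",
]

unusual, missing = review(window)
print("Unusual:", unusual)
print("Missing:", missing)
```

In this window the disk warning surfaces because nothing marks it as routine, and the nightly backup is reported as missing because it was scheduled but never logged.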

Sumo Logic delivers a turnkey SaaS analytics platform with these best practices implemented and ready to leverage.

Sumo Logic is on a mission to help organizations achieve the reliability and security goals for their applications and operations. Trusted by thousands of global companies to secure and protect against modern threats, we also provide real-time insights to improve application performance and monitor and troubleshoot quickly. With hundreds of out-of-the-box native integrations, Sumo Logic makes managing and analyzing log files easy. Centralizing logs in one place empowers teams to accelerate innovation without compromising reliability or security. We offer a 30-day free trial with free training and certification; no credit card required. Watch a demo to see Sumo in action, or sign up today to explore the platform with your data and logs.