
Docker is an open-source containerization platform for virtualization. Containers provide a means of enclosing an application, including its filesystem, into a single, replicable package. Initiated in 2013 by Docker founder Solomon Hykes, the Docker platform was built for the Linux operating system and has since achieved widespread popularity among developers and cloud service providers for its ability to simplify and automate the creation and deployment of containers.

Containers are not a new technology. Like virtual machines (VM), a Docker container is a form of virtualization that has been around for years. But because container technology allows developers to package an application, along with its dependencies, into a standardized unit, containers are quickly becoming a preferred approach to virtualization. Automation through Docker has been critical to that success.

While a VM creates a whole virtual operating system, a container brings along only the files its application needs that are not already present on the host computer. Containers run lean by sharing the kernel of the system they run on, and where possible, they even share dependencies between apps. That means smoother performance, smaller application size and faster deployment.

There are several reasons why your Docker container may refuse to connect. For example, you may need to publish the exposed ports using the following option: -p (lowercase) or --publish=[]. This tells Docker to map host ports that you choose to the container's exposed ports. Setting the mapping yourself matters because you then know which ports are in use, rather than having to inspect the container to find out and adjust. Another common issue is running NGINX proxy redirects between containers that sit on different networks. In this scenario, you can create your own network and attach both containers to it using Docker run commands.
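As a rough illustration of the two fixes described above, the sketch below assembles the corresponding docker run and docker network create argument lists in Python. The helper names, the nginx image, the network name appnet, the container name and the port numbers are all placeholders chosen for this example, and the commands are only built, not executed.

```python
from typing import List, Optional


def network_create_command(network: str) -> List[str]:
    """Build (but do not run) a `docker network create` argument list."""
    return ["docker", "network", "create", network]


def run_command(image: str, name: str,
                host_port: Optional[int] = None,
                container_port: Optional[int] = None,
                network: Optional[str] = None) -> List[str]:
    """Build (but do not run) a `docker run` argument list."""
    cmd = ["docker", "run", "-d", "--name", name]
    if host_port is not None and container_port is not None:
        # -p HOST:CONTAINER publishes the container port on a host port you pick.
        cmd += ["-p", f"{host_port}:{container_port}"]
    if network is not None:
        # Containers attached to the same user-defined network can reach each other.
        cmd += ["--network", network]
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    # Placeholder values; substitute your own image, names and ports.
    print(" ".join(network_create_command("appnet")))
    print(" ".join(run_command("nginx", "proxy", 8080, 80, network="appnet")))
```

Passing one of these lists to subprocess.run would execute it on a machine where Docker is installed.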

A container name conflict is a common problem encountered by new Docker users. It means you may have already started a container that uses the same name. You can either delete the first container or choose a new name for the new container.
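The two remedies mentioned here (removing the earlier container or picking a different name) can be sketched as follows. The helper names and the naming scheme with a numeric suffix are assumptions made for illustration; only the docker rm command and its -f flag come from the Docker CLI.

```python
from typing import Iterable, List


def remove_command(name: str, force: bool = False) -> List[str]:
    """Build (but do not run) a `docker rm` argument list."""
    cmd = ["docker", "rm"]
    if force:
        # -f also removes a container that is still running.
        cmd.append("-f")
    cmd.append(name)
    return cmd


def free_name(base: str, taken: Iterable[str]) -> str:
    """Return `base` if unused, else `base-2`, `base-3`, ... (hypothetical scheme)."""
    taken_set = set(taken)
    if base not in taken_set:
        return base
    suffix = 2
    while f"{base}-{suffix}" in taken_set:
        suffix += 1
    return f"{base}-{suffix}"


if __name__ == "__main__":
    existing = ["web", "db"]  # placeholder list of names already in use
    print(" ".join(remove_command("web")))
    print(free_name("web", existing))
```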

This is a problem that Docker users encounter when the host has multiple network adaptors and the adaptor priority is misconfigured. You can run a command to display the network adaptors and troubleshoot and, in some instances, check whether you have the most up-to-date SDK installed. The default Docker config file location is %programdata%\docker\config\daemon.json.

Docker plugins are out-of-process extensions that add capabilities to the Docker Engine. A plugin is a process running on the same host as the Docker daemon or on a different one, which registers itself by placing a file in one of the plugin directories on the Docker host.

Docker Build: Whenever you create an image, you use Docker Build. It is a key part of your software development life cycle, allowing you to package and bundle your code and ship it anywhere.

Docker Toolbox: If you're using Docker on a system that does not meet minimum system requirements for the Docker for Windows app, Docker Toolbox is an installer for quick setup and launch of a Docker environment on older Mac and Windows systems.

Docker API: The Engine API is an HTTP API served by Docker Engine. It is the API the Docker client uses to communicate with the engine, so anything the Docker client can do can also be done through the API.
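To make the HTTP nature of the Engine API concrete, here is a minimal sketch that lists containers using only the Python standard library. It assumes a Linux host where the daemon listens on the default Unix socket /var/run/docker.sock and uses the unversioned /containers/json path; the class and function names are invented for this example.

```python
import http.client
import json
import socket
from urllib.parse import urlencode


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str):
        super().__init__("localhost")
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.connect(self.socket_path)
        self.sock = sock


def containers_path(show_all: bool = False) -> str:
    """Request path for listing containers; all=true includes stopped ones."""
    path = "/containers/json"
    if show_all:
        path += "?" + urlencode({"all": "true"})
    return path


def list_containers(socket_path: str = "/var/run/docker.sock",
                    show_all: bool = False):
    """Send the request to the daemon and decode the JSON response."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", containers_path(show_all))
        return json.loads(conn.getresponse().read())
    finally:
        conn.close()


if __name__ == "__main__":
    # Requires a running Docker daemon and permission to read the socket.
    for container in list_containers(show_all=True):
        print(container["Id"][:12], container["Image"])
```

In practice, pinning an API version prefix in the path is generally advisable; it is omitted here to keep the sketch short.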

Docker ps: 'docker ps' is the Docker command that lists containers. By default, it shows only the running ones.
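A small sketch of how a script might consume this listing: it builds a docker ps call that prints one JSON object per line and parses that output. The helper names are hypothetical, and the sample input in the demo is made up to stand in for real command output.

```python
import json
from typing import Dict, List


def ps_command(show_all: bool = False) -> List[str]:
    """Build (but do not run) a `docker ps` call with line-delimited JSON output."""
    cmd = ["docker", "ps"]
    if show_all:
        # -a includes stopped containers, not only the running ones.
        cmd.append("-a")
    cmd += ["--format", "{{json .}}"]
    return cmd


def parse_ps_output(output: str) -> List[Dict[str, str]]:
    """Parse one JSON object per line, skipping blank lines."""
    return [json.loads(line) for line in output.splitlines() if line.strip()]


if __name__ == "__main__":
    # Made-up sample standing in for real `docker ps` output.
    sample = '{"Names": "web", "Status": "Up 2 minutes"}\n'
    for row in parse_ps_output(sample):
        print(row["Names"], row["Status"])
```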

Docker Registry: Docker Registry is a stateless, highly scalable server-side application that stores and lets you distribute Docker images.

Docker Command Line Interface (Docker CLI): A command line tool that lets you talk to the Docker daemon.

Docker Swarm: A Docker Swarm is a group of physical or virtual machines running the Docker application configured to join together in a cluster.

Docker Hub: Docker Hub is a cloud-based repository that lets you create, test, store and deploy Docker container images.

What is Docker Engine?

The Docker container is the component that delivers efficiencies, and Docker Engine is what makes it all possible. In short, a Docker container is run from an image file, which is essentially a file created to run a particular application in a specific operating system. Docker Engine uses that image file to build a container and run it.

The lightweight Docker Engine is the real innovation that has made Docker a successful tool. Its ability to automate the deployment of containers has brought the technology to prominence. It offers the benefit of greater scalability in virtualized environments and allows for faster builds and testing by development teams.

Docker Compose is a tool used to define and run multi-container applications with Docker. Using Docker Compose and a YAML file, you can create or start all the services in your configuration with a single command.

Examples of common Docker Compose queries include:

Docker Compose logs

Docker Compose logs follow tail

Docker Compose memory limit

Docker Compose network name

Docker Compose tags
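For the first two queries in this list, and for the single start-up command mentioned earlier, the sketch below assembles the relevant docker compose argument lists. It assumes the Compose plugin invoked as docker compose; the helper names, the file name compose.yaml and the service name web are placeholders, and nothing is executed.

```python
from typing import List, Optional


def compose_up_command(compose_file: Optional[str] = None,
                       detached: bool = True) -> List[str]:
    """Build (but do not run) the command that starts every service in the file."""
    cmd = ["docker", "compose"]
    if compose_file is not None:
        # -f selects a specific compose file and goes before the subcommand.
        cmd += ["-f", compose_file]
    cmd.append("up")
    if detached:
        cmd.append("-d")  # run in the background
    return cmd


def compose_logs_command(service: Optional[str] = None,
                         follow: bool = False,
                         tail: Optional[int] = None) -> List[str]:
    """Build (but do not run) a `docker compose logs` call."""
    cmd = ["docker", "compose", "logs"]
    if follow:
        cmd.append("--follow")  # keep streaming new log lines
    if tail is not None:
        cmd += ["--tail", str(tail)]  # only the last N lines per container
    if service is not None:
        cmd.append(service)
    return cmd


if __name__ == "__main__":
    print(" ".join(compose_up_command("compose.yaml")))
    print(" ".join(compose_logs_command("web", follow=True, tail=50)))
```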

DevOps teams gain several advantages by using Docker. With instant OS resource startup and enhanced reliability, the platform is well-suited to the quick iterations of agile development teams. Development environments are consistent across the team, using the same binaries and language runtime.

Because containerized applications are consistent across systems in their resource use and environment, DevOps engineers can be confident an application will behave in production as it does on their own machine. Docker containers also help to solve compiling issues and simplify the use of multiple language versions in the development process.

Docker containers represent the new way of packaging an application and all its dependencies into a standardized unit for deployment. The key to successfully deploying container applications is continuously monitoring the Docker environment. You need a centralized approach to log management using container-aware monitoring tools.

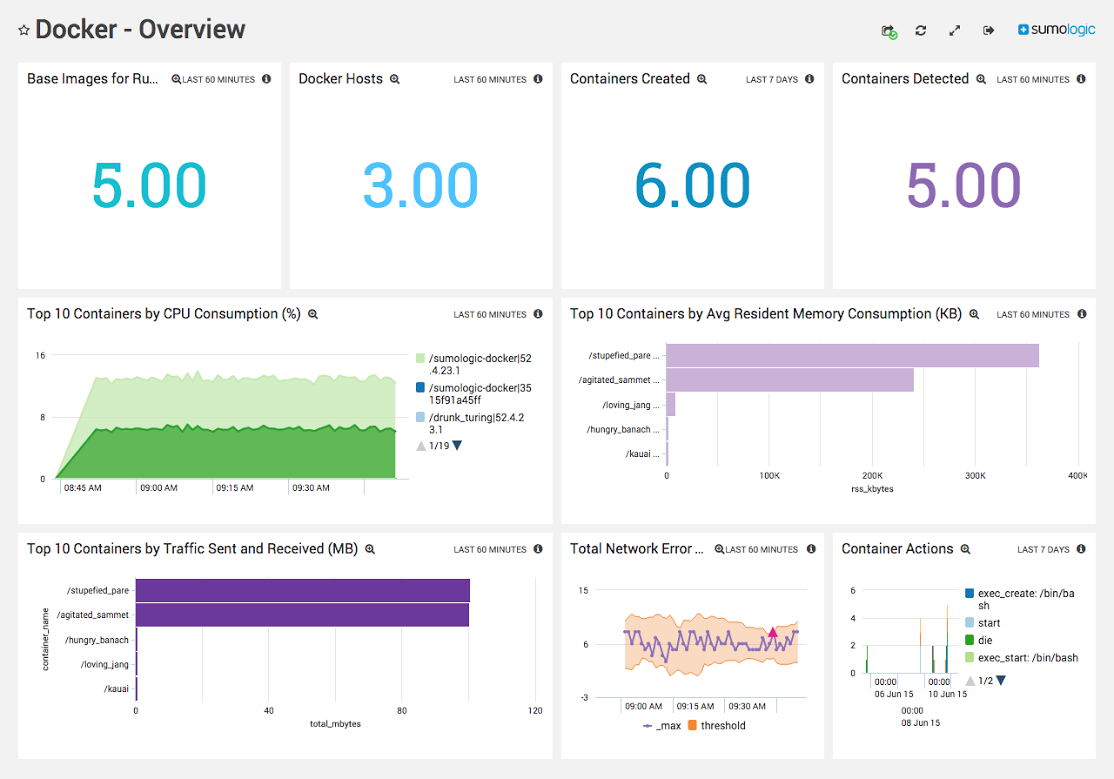

Sumo Logic delivers a comprehensive strategy for the continuous monitoring of Docker infrastructures. You can correlate container events, configuration information and host and daemon logs to get a complete overview of your Docker environment. There's no need to parse different log formats or manage logging dependencies between containers.

Sumo Logic's integration for Docker containers enables IT teams to analyze, troubleshoot and perform root cause analysis of issues surfacing from distributed container-based applications and Docker containers.

Reduce downtime and move from reactive to proactive monitoring.