Get the report

MoreMarch 28, 2023

Authorship -

AI Contribution Score (ACS): 0%

Human: 100%

ChatGPT has taken the world by storm, so much so that we are all left guessing how far this will go. And it’s not a trivial question, as it relates to the future of humanity itself.

On one extreme, technology is increasing rapidly enough to synthesize some of the most fundamental parts of our existence—communicating naturally with one another. That can be a scary thought. Many of us feel like Truman in his artificial world as he saw the button reading, "How's it going to end?" and replied, "I've been wondering the same thing myself lately." The only difference is we are willing participants in creating this artificial world.

On the other extreme, we welcome new powerful tools that help us achieve real results in a short amount of time, giving us our only non-renewable resource – time. As with any new technology, all we can do is assess them as they develop and put in safety measures, or sometimes even countermeasures, and move forward. In the words of the CEO of OpenAI, "We've got to be careful here. I think people should be happy that we are a little bit scared of this." Nowhere is this more true than in the world of cybersecurity itself.

One of the things that make large language models (LLM) so powerful is that a “language” is not limited to the spoken word but also includes programming languages. Because GPT is multilingual, it allows us to ask it a question in English and have it translate the answer into another language, such as Python.

That is a game changer, but not new to ChatGPT. When Salesforce started exploring the possibilities of this over a year ago, Chief Scientist Silvio Savarese explained their project CodeGen:

[With CodeGen] we envisioned a conversational system that is capable of having a discussion with the user to solve a coding problem or task. This discussion takes the form of an English text-based discourse between a human and a machine. The result is AI-generated code that solves the discussed problem.

GitHub has Copilot and Copilot X which are built on GPT-3 and GPT-4 respectively and help developers write and debug their code, generate descriptions of pull requests, automatically tags them, and can provide AI-generated answers about documentation. Extrapolate this out, instead of a human doing the programming, machines are learning to program themselves, with the human providing only high-level guidance. What could possibly go wrong?

In cyber defense, adversaries are driven to discover new vulnerabilities and write exploits against those found and create new tools for breach, extortion, ransomware, remote access, and the rest. Exploit development is a very technical and challenging undertaking, and when exploits are created they are valuable.

These tools are shared on black markets for selling and trading new zero-days, rootkits, and remote access trojans (RATs). Eventually, the defenders catch up and adversary tactics, techniques and procedures (TTPs) become part of the common body of knowledge of attack vectors and are entered into the common vulnerabilities and exposures (CVE) and MITRE ATT&CK hall of fame. Soon after, vendors push detections into their point solutions.

As an example on the defending side, we actively fight to detect malicious binaries by their signatures, often in the form of their unique hash value or pattern matching of binary sequences. Once all the anti-virus vendors identify the malicious signatures, attackers have to find (or create) other tools that can go undetected.

I recall experimenting with a little detection avoidance tool called DSplit. The concept was simple. Split binaries into two, run them both through the AV tool in which you’re hoping to avoid detection, and see which file trips detection. Take the offending half, split it again and rinse and repeat. Eventually, you get down to a small enough chunk of bits that you can quite literally change a 0 to a 1, and then viola! – it passes undetected.

That approach is somewhat shallow, and the reassembled binary may not run as expected, but it brings us back to ChatGPT. What if every malicious developer could create unique code at the outset with minimal coding skills? And that code itself could be polymorphic in nature, always being unique with no static fingerprint or signature. Defenders and security vendors now have to refocus on behavior and anomaly detection. For a deep dive into the threat offensive AI poses to organizations, this paper is worth a read.

Again, this is nothing new, but yesterday's detection strategies are now more stale than ever. It’s critical that tools in the security stack are intelligent enough to correlate across the entire lifecycle of an attack and have an entity-centric view of activity in order to identify malicious patterns of behavior. Detecting the initial attack vector may be ideal, but as it becomes more difficult, our tooling should also adopt proven approaches that rely on behavior-based anomalies.

With the advent of ChatGPT, this type of detection becomes even more important.

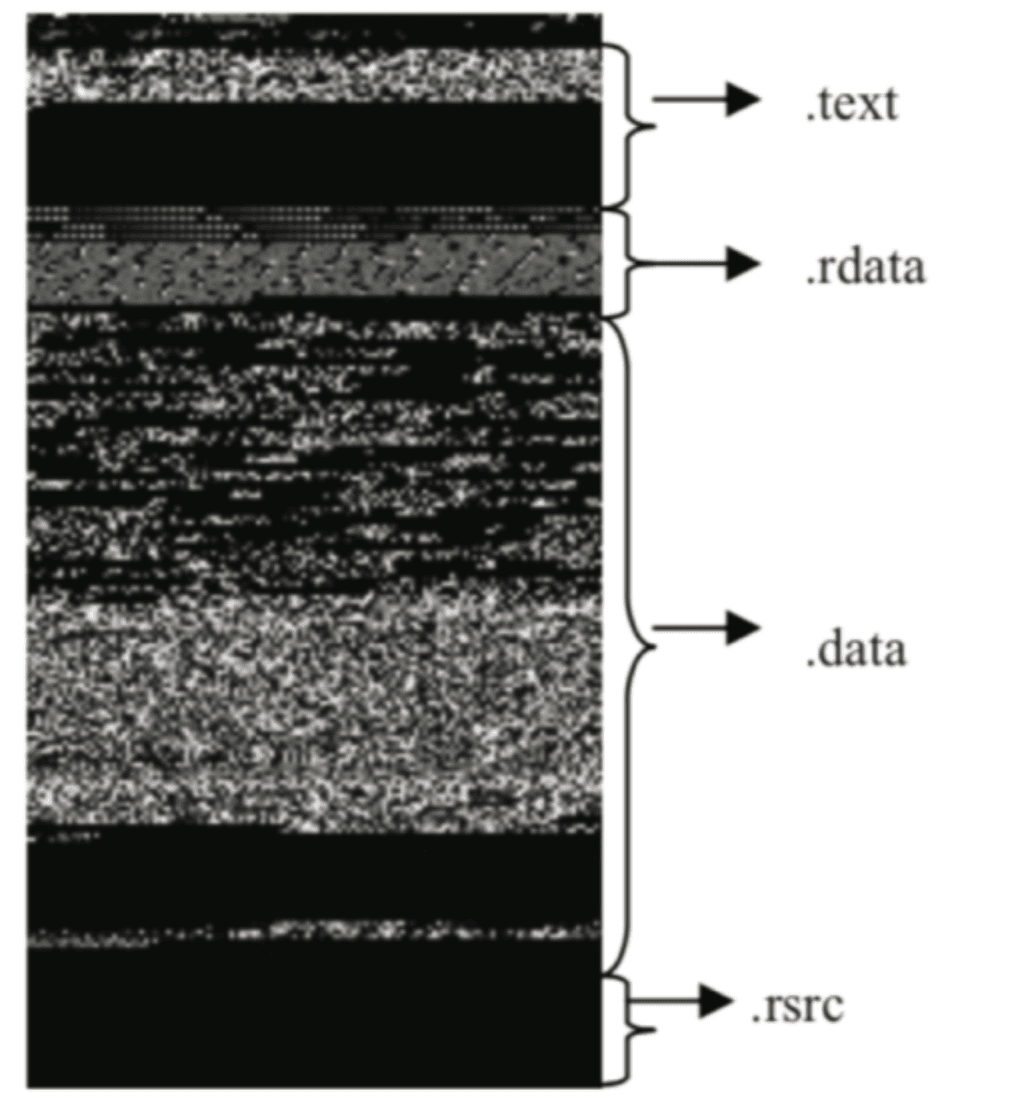

Of course, as the cat-and-mouse game levels up on both sides, machine learning, and AI can also be used in malware image classification at the binary level. In head-splitting terminologies, various machine learning approaches such as support vector machine, k-nearest neighbors, random forests, naive Bayes, and decision trees have been used to detect and classify known malware.

But even more creative than these names are detection techniques where malware binaries have been converted into actual grayscale images, then using convolutional neural network (CNN) models for static malware classification by “computer vision”. In one test, byte plot visualization of malware as grayscale images across 9,342 malware samples achieved 80-99% detection accuracy.

With the recent release of ChatGPT-4, it can “see” and understand images. And this may pave the way for unique ways for it to identify unique malicious code not seen (editor’s note: hah!) before. Around and around we go with AI helping adversaries and defenders alike.

Truth be told, I’ve always been the guy in the back of the room rolling my eyes every time AI/ML is mentioned by a security vendor. I’m doing less eye-rolling these days, as AIOPs have made leaps and bounds. Gartner’s Market Guide for AIOps Platforms detailed what features and use cases AI brings to the table. They defined AIOps as platforms that can analyze telemetry and events, and identify meaningful patterns that provide insights to support proactive responses with five common characteristics:

Cross-domain data ingestion and analytics

Topology assembly from implicit and explicit sources of asset relationship and dependency

Correlation between related or redundant events associated with an incident

Pattern recognition to detect incidents, their leading indicators or probable root cause

Association of probable remediation

I don’t actually think the future will be in AIOps platforms that can ingest huge volumes of telemetry (logs, metrics, traces) and then identify malicious behaviors as they unfold. We already have tools doing this today in the form of security information and event management (SIEMs), security orchestration, automation, and response (SOARs) and user and entity behavior analytics (UEBA).

What I expect to see is fewer AI-dedicated “platform” plays and more adoption of ML functionality across proven security solutions. Here at Sumo Logic, for example, our customers have found value in our ability to analyze global security signals in an obfuscated and anonymized way and then present back to customers a global view of attack vectors targeted against them as it compares to thousands of other similar customers.

These baselines help our customers optimize their security posture based on how unusual their security findings are compared to others. Because we can apply ML to huge data sets not available to siloed security teams, it’s a value add that only comes with a multi-tenant cloud-native offering. Again, expect next-gen offerings to rapidly adopt these features, especially as Google, Apple, Meta, Baidu and Amazon release native services for developers to play with.

More than just that bird's eye view, it’s now very tactical as well for security operations center (SOC) analysts. Every security Insight generated by Sumo Logic’s Cloud SIEM includes a Global Confidence Score which baselines how alerts were previously triaged (true or false positive) by the company's SOC analysts and by other customers' SOC teams. The score is then presented on a scale of 0-100, where the higher the value, the more actionable the alert is. “No score” would mean there is not enough data yet to make any prediction.

We are also in the early stages of an ML-driven rule recommendation feature that will help security engineers determine what severity a security signal should have. As for ChatGPT’s AI, we’re still exploring ways, with trepidation, to incorporate it into our security solutions.

We do see some of our customers already leveraging it successfully for tasks in both analyst workflows and engineering/development, driving that shift-left and DevSecOps approach. I asked M. Scott Ford, Co-Founder & Chief Code Whisperer at CorgiBytes, how he sees things unfold with ChatGPT and AI in modern app development.

Large language models, such as ChatGPT, have a huge potential to be another tool in an application developer's toolbox. For example, members of the Legacy Code Rocks community have been experimenting with using ChatGPT to suggest refactoring improvements. I see the potential for a similar approach being used to detect common programming mistakes that can lead to security vulnerabilities. This can help short-circuit the cat-and-mouse game by helping sanitize the security surface as it's being developed.

How much of this technology works its way into our security stack is to be determined, but taking off our propeller head caps for a moment, it’s important to realize that most attackers aren’t “1337”, or at least don’t need to be. Remember the human is still the weakest link, and the easiest way in may be a carefully crafted phishing email with an attachment. We’ve been painfully trained on how to spot phishing attacks. Speaking of which, I think my mandatory training is overdue.

But the craft of social engineering and phishing is advancing. They are most effective when written by native English speakers and lethal when done using a little humint research against a target, making emails sound realistic and relevant. This means these attacks can’t be done at scale. Until now, thanks to ChatGPT!

It happens to have perfect grammar, can research what a company does, and write extremely realistic emails. What’s even more shocking, is that it has been used to carry on email correspondence with a victim, and only include a malicious attachment after some rapport has been established. And this phishing, vishing (voice phishing), and smishing (SMS text phishing) can all be done programmatically in a scalable way.

Do we need an AI countermeasure to evaluate each message and provide a “human vs AI” rating and focus attention on the action it's trying to lure us into?

Again, not sure who is the cat and who is the mouse anymore, but the double edge sword of AI has led to AI detecting AI. GPTZero for example, claims to be the world's #1 AI detector with over one million users. The app, ironically, uses ChatGPT against itself, checking the text to determine how much “AI involvement” there has been by ChatGPT-like services. Perhaps this will be baked into future email protection solutions.

Other vendors already claim AI/ML email threat detection, but then we're back to the dsplit technique above. Can an attacker simply intentionally misspell a few words and get some grammar incorrect to convince GPTZero the authorship was actually human? Can attackers ask ChatGPT to incorporate common spelling and grammar mistakes into natural language processing (NLP) output?

Although some guardrails are being created to prevent ChatGPT from writing phishing emails or malware, there have been workarounds. One observed use was Telegram bots that leveraged unbounded use of ChatGPT available on dark websites. You could get 20 free sample query options and after that, for every 100 queries, people are being charged $5.50. In some cases, scammers can now craft emails that are so convincing they can extort cash from victims without even relying on malware.

Again, the net of all this is, our detection stack has to be more intelligent and “assume breach”. Track all activity across all entities and identify behavior that is unusual or potentially malicious. It’s never been true that attackers only have to be right once, and defenders have to be right all the time. That only holds true for the initial access. After that, it’s flipped upside down where attackers have to be stealthy all the time as one misstep will trip a wire in a good layered defense strategy.

Platforms like Sumo Logic include traditional detection rules like brute force attempts, reconnaissance detections etc, but also track each individual user and system dynamically creating Signals when “first seen” behavior is observed. Has this user logged in from this location before? Has this machine been remotely accessed in this way previously? Has this user accessed these AWS Secrets before?

The kill-chain requires attackers to eventually create indicators of compromise, regardless of the initial infection vector. Thus, it’s not more about shifting up, than it is about shifting left.

There is no doubt research is being done at all levels on how to leverage AI in securing our national infrastructure and leveling up our cyber defenders. Truly, there are many areas that ChatGPT can be woven into the defenders workflow. And once a security program is built on top of a platform with intelligent automation, we enter the art of the possible.

Eventually, we will be able to use ChatGPT to feed in a lot of detections to make some intelligent determinations… but we are not there yet. And the best approach is to take a use-case-driven approach to solve pain points individually and programmatically.

Instead of navigating to Ultimate Windows Security to search the thousands of Windows Event-IDs and what they mean, simply have ChatGPT provide you with a summary automatically as part of the workflow.

Instead of trying to understand what an obfuscated power-shell command is doing, feed it to ChatGPT and have it run its analysis, and provide that as an enrichment.

Instead of continuously reinventing the wheel as we create incident response plans and security/governance policies, ask ChatGPT to write the initial drafts and simply review the output. If you already have a mature security program, take existing documents (sanitize it first) and ask ChatGPT to identify any potential weaknesses or gaps.

Instead of waiting on a vendor to build a much-needed integration, ask ChatGPT for help. With the rise of “bring your own code” automation and orchestration tools, security analysts with little to no dev experience can ask ChatGPT to create integrations for them. The majority of the time a playbook or enrichment is simply a script with API calls written in Python or Go. Imagine a developer asking ChatGPT “Please create a script to integrate with a MISP threat intelligence server and prompt for required data”, and with little to no tweaking an integration is up and running.

Now for some serious disclaimers. ChatGPT is fallible. It may even argue with you that it’s perfect, and insist it doesn’t make mistakes, but that should only increase your caution when incorporating it into your SecOps workflows. It’s imperative we understand the inherent weaknesses of ChatGPT or LLM in general. Nothing it says should be treated as “actionable” unless verified by your in-house correlation engine or human analysts.

We already know from benchmarks, the training set of data on which AI relies can be contaminated and incorrect. No matter how good you think your defenses are, it’s imperative you simulate real-world attacks and continuously verify what your security controls will prevent, detect, or miss. Red-teaming, blue-teaming, purple-teaming, and breach and attack simulation (BAS) solutions are necessary to validate your investments in SIEM, UEBA, and SOAR solutions.

In addition, know that NOTHING IS PRIVATE when it comes to conversing and interacting with ChatGPT. Per the OpenAI agreements and policies, all is fair game with data submitted as the confidentiality protection is solely in OpenAI’s favor. That means that neither the inputs provided to OpenAI nor the output it produces are treated as confidential by OpenAI.

There is a definite risk here of developers or analysts inadvertently leaking sensitive information and unknowingly thinking there is some sort of SaaS-type privacy agreement in place. Just recently some users discovered that they could see the titles of other people's chat histories causing the platform to be taken down for ten hours. This is the wild-west, and remember many components of ChatGPT are still in beta.

In closing, we are rapidly moving from an AI-assisted to an AI-led way of operating. We recommend carving out time across your teams to review how to best defend against and defend with burgeoning AI technology. Try to avoid chasing after the next shiny thing. Focus on continuous improvement and trusted technologies and partners. Economic headwinds demand we do more with less, and ChatGPT may prove to be a good partner in some unexpected ways, even with the unforeseen risks it introduces.

How will the open source community respond to level up defenders on this new threat type? Learn more about the advancements of open-source security solutions.

Reduce downtime and move from reactive to proactive monitoring.

Build, run, and secure modern applications and cloud infrastructures.

Start free trial